Emotion Recognition in the Wild via Convolutional Neural Networks and Mapped Binary Patterns

Published in Proc. ACM International Conference on Multimodal Interaction (ICMI), Seattle, 2015

Recommended citation: Gil Levi and Tal Hassner. Emotion Recognition in the Wild via Convolutional Neural Networks and Mapped Binary Patterns. Proc. ACM International Conference on Multimodal Interaction (ICMI), Seattle, 2015.

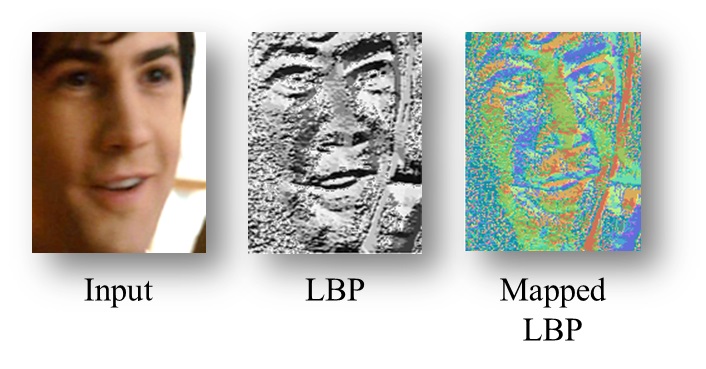

Image intensities (left) are converted to Local Binary Pattern (LBP) codes (middle), shown here as grayscale values. We propose to map these values to a 3D metric space (right) in order to use them as input for Convolutional Neural Network (CNN) models. 3D codes in the right image are visualized as RGB colors.
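To make the first step of the figure concrete, here is a minimal NumPy sketch of computing LBP codes from image intensities. It assumes the basic 8-neighbor LBP over a 3×3 window, a `>=` comparison against the center pixel, and an arbitrary clockwise bit ordering. These choices, and the helper name `lbp_codes`, are illustrative assumptions and may differ from the configuration used in the released code. The sketch does not cover the subsequent mapping of codes to a 3D space.

```python
import numpy as np

def lbp_codes(gray):
    """Basic 8-neighbor LBP codes for the interior pixels of a 2D array.

    Assumed variant: 3x3 window, bit set when neighbor >= center,
    bits ordered clockwise starting at the top-left neighbor.
    """
    gray = np.asarray(gray)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    # (row offset, column offset) of each neighbor; the ordering is an arbitrary choice.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        # Shifted view of the image aligned with the interior pixels.
        neighbor = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbor >= center).astype(np.uint8) * np.uint8(1 << bit)
    return codes

# Illustrative check on synthetic data: strictly increasing intensity
# transformations preserve pixel ordering, so the codes do not change.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(6, 6)).astype(np.float64)
codes = lbp_codes(image)
codes_affine = lbp_codes(0.5 * image + 40.0)  # brightness/contrast change
codes_gamma = lbp_codes(np.sqrt(image))       # gamma-like change
unchanged = (np.array_equal(codes, codes_affine)
             and np.array_equal(codes, codes_gamma))
```

Because each code depends only on the ordering of a pixel and its neighbors, `unchanged` is true for any strictly increasing intensity transformation, which is the property the proposed representations rely on.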

Abstract

We present a novel method for classifying emotions from static facial images. Our approach leverages the recent success of Convolutional Neural Networks (CNN) on face recognition problems. Unlike the settings often assumed there, far less labeled data is typically available for training emotion classification systems. Our method is therefore designed to simplify the problem domain by removing confounding factors from the input images, with an emphasis on image illumination variations, in an effort to reduce the amount of data required to effectively train deep CNN models. To this end, we propose novel transformations of image intensities to 3D spaces, designed to be invariant to monotonic photometric transformations. These transformations are applied to CASIA Webface images, which are then used to train an ensemble of CNNs with multiple architectures on multiple representations. Each model is then fine-tuned with limited emotion-labeled training data to obtain the final classification models. Our method was tested on the Emotion Recognition in the Wild Challenge (EmotiW 2015), Static Facial Expression Recognition sub-challenge (SFEW), and was shown to provide a substantial 15.36% improvement over baseline results (40% gain in performance)*.

* These results were obtained without training on any benchmark for emotion recognition other than the EmotiW’15 challenge benchmark. To our knowledge, to date, these are the highest results obtained under such circumstances.

Downloads

This page provides code and data for reproducing our results. If you find our code useful, please add a suitable reference to our paper in your work. Downloads include:

- Python notebook for example usage.

- Zip file with code for converting RGB values to our mapped LBP codes.

- Link to a public Dropbox zip file with trained CNN models. The models include VGG_S trained on RGB and the four mapped LBP-based representations described in the paper. Warning: ~2GB file!

- A Gist page for our trained models, which is being uploaded to the BVLC/Caffe Model Zoo.

What’s New

20-November-2017: Fixed broken links to Python notebook and CNN models.

14-December-2015: Git repository added with sample code, meta-data files and instructions.

Copyright and disclaimer

Copyright 2015, Gil Levi and Tal Hassner