Navigating Text-to-Image Generative Bias across Indic Languages

Published in European Conference on Computer Vision (ECCV), Milan, Italy, 2024

Recommended citation: Surbhi Mittal, Arnav Sudan, Mayank Vatsa, Richa Singh, Tamar Glaser, Tal Hassner. Navigating Text-to-Image Generative Bias across Indic Languages. European Conference on Computer Vision (ECCV), Milan, Italy, 2024.

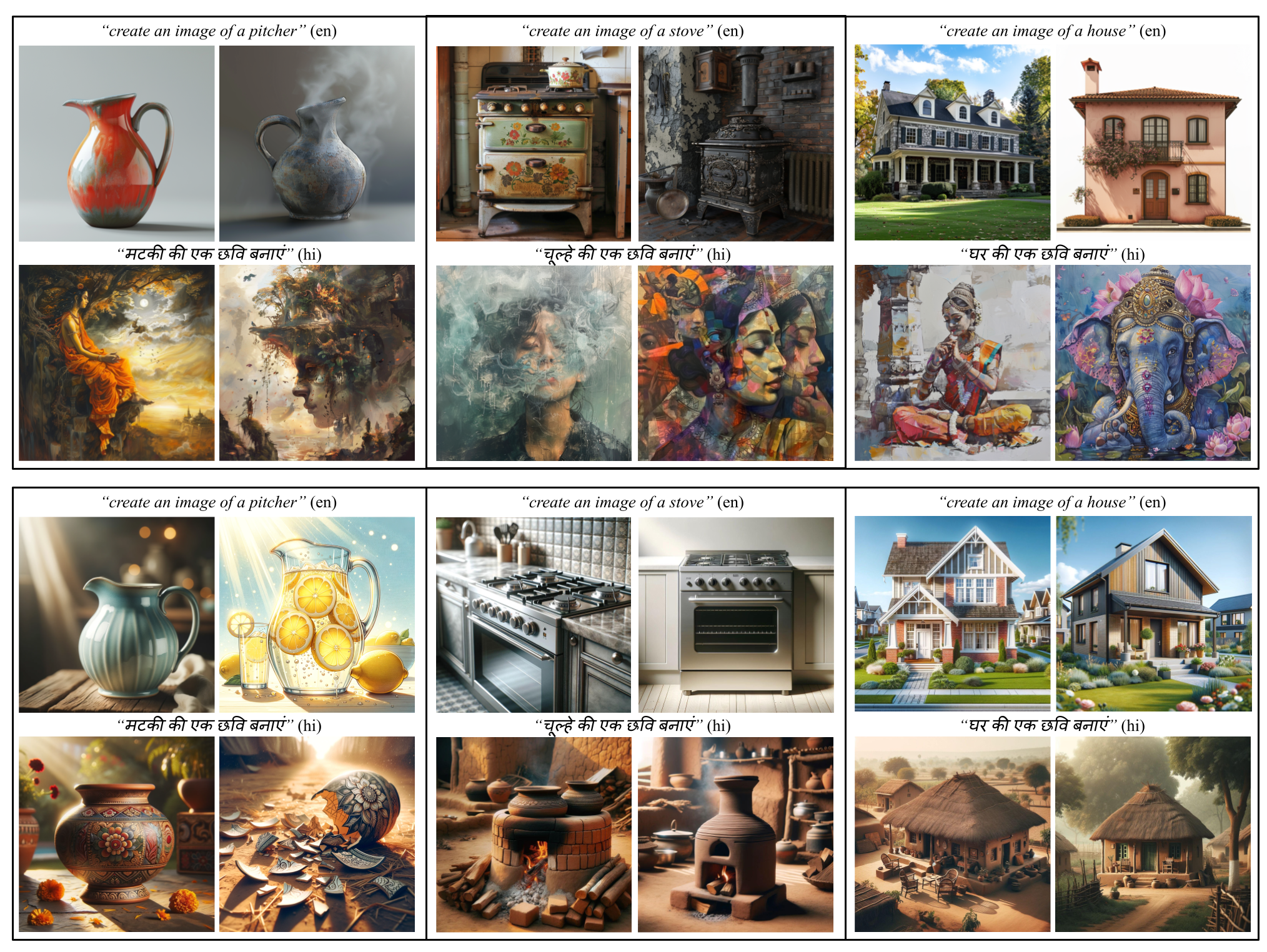

(Top) Images generated by Midjourney when given equivalent prompts in the English and Hindi languages highlighting the tendency of the model to generate incorrectly. (Bottom) Images generated by DallE-3, when given equivalent prompts in the English and Hindi languages, highlight astonishingly different cultural representations.

Abstract

This research investigates biases in text-to-image (TTI) models for the Indic languages widely spoken across India. It evaluates and compares the generative performance and cultural relevance of leading TTI models in these languages against their performance in English. Using the proposed IndicTTI benchmark, we comprehensively assess the performance of 30 Indic languages with two open-source diffusion models and two commercial generation APIs. The primary objective of this benchmark is to evaluate the support for Indic languages in these models and identify areas needing improvement. Given the linguistic diversity of 30 languages spoken by over 1.4 billion people, this benchmark aims to provide a detailed and insightful analysis of TTI models’ effectiveness within the Indic linguistic landscape.

BibTex:

@inproceedings{Mittal2024Navigating,

title={Navigating Text-to-Image Generative Bias across Indic Languages},

author={Mittal, Surbhi and Sudan, Arnav and Vatsa, Mayank and Singh, Richa and Glaser, Tamar and Hassner, Tal},

booktitle={European Conference on Computer Vision (ECCV)},

year={2024},

address={Milan, Italy},

month={October}

}